Welcome to the "Sustainability in the Digital Age" series

In an era where digital technologies are reshaping industries and daily life, the environmental impact of AI systems has become a growing concern. This course explores efficient AI methodologies to address these challenges. From deep learning model compression to low-bit quantization and collaborative inference, we delve into techniques that enhance computational efficiency and reduce energy consumption. We will also focus on low-bit quantization specifically for large language models (LLMs), showcasing cutting-edge open-source tools and models. Join us to learn how to build sustainable AI systems while pushing the boundaries of innovation.

This course is part of the Sustainability in the Digital Age series, a collaborative project between colleagues from Stanford University, SAP and the Hasso Plattner Institute.

Language: English

German, English

Advanced

Course information

Is this course for me?

Prerequisites

- Basic understanding of machine learning and deep learning principles

- Proficiency in Python programming

- Familiarity with neural networks is recommended

Knowledge

We found two preparatory courses that should be suitable preparation for this course:

More comprehensive, in German: Praktische Einführung in Deep Learning für Computer Vision (Practical Introduction to Deep Learning for Computer Vision)

Focused on the basics, in English: Week 5 of CS50’s Introduction to Artificial Intelligence with Python

Time required: The course runs for two weeks with a total workload of approximately 6-8 hours.

All learning materials (video lectures and slides, additional reading materials and case studies, self-tests) are available from the start of the course. The final exam opens at the end of the first week and remains open until the course ends. Participants therefore have two weeks to work through the content and one week to take the exam.

What you'll learn

- Efficient deep learning techniques with a focus on sustainability

- Principles of model compression and low-bit quantization

- Collaborative inference strategies to optimize resource usage

- Application of low-bit quantization for large language models (LLMs)

- Overview and practical use of open-source tools for efficient AI

Who this course is for

- Students

- Professionals

- Lifelong learners


Rating

This course has an average rating of 4.1 stars from 274 votes.

Certificate Requirements

- Gain a Record of Achievement by earning at least 50% of the maximum number of points from all graded assignments.

- Gain a Confirmation of Participation by completing at least 50% of the course material.

Find out more in the certificate guidelines.

This course is offered by

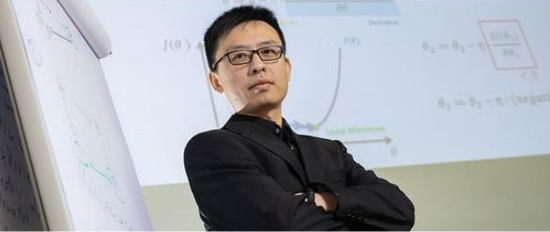

PD Dr. Haojin Yang is a senior researcher at the Hasso Plattner Institute (HPI), where he leads the Multimedia and Machine Learning (MML) research group. He received his habilitation, the qualification for a professorship, in 2019. His research focuses on efficient deep learning, model acceleration and compression, and agentic AI systems.